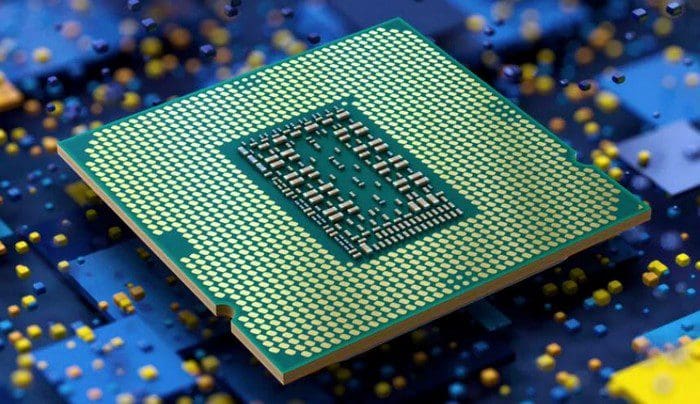

CPUs are incredibly complex beasts. A huge number of interconnected parts have to work in perfect unison to achieve the levels of performance that we see. Memory performance is a key factor in the performance of modern CPUs, and it is frequently the limiting one.

Why is memory speed so important?

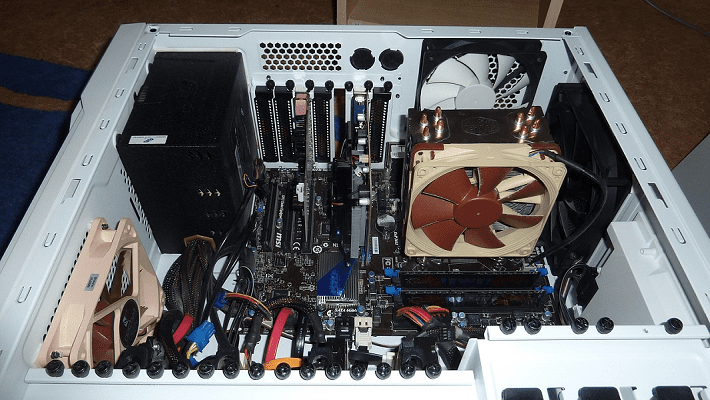

CPUs are incredibly fast, with the latest generations running at up to 5.7GHz when adequately cooled. That is 5.7 billion clock cycles every second, and a modern core can complete several operations in each cycle. Many of these operations act on data that needs to be stored in memory.

Main system memory, known as RAM, is also very fast, but only when compared to anything other than the CPU. The absolute latency of modern high-end RAM is on the order of 60 nanoseconds, which at 5.7GHz translates to roughly 342 CPU cycles. To speed up memory access, CPUs use a cache that dynamically holds copies of recently used data. This cache is located on the CPU die itself and is built from SRAM cells rather than DRAM cells, making it a lot faster. The trade-off is that the CPU cache is also a lot smaller than system RAM, generally totalling less than 100MB. Still, despite its diminutive size, the tiered CPU cache system massively increases system performance.
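The 342-cycle figure is simply the latency multiplied by the clock rate, since one GHz is one cycle per nanosecond. A minimal sketch of that arithmetic, using the 60 nanosecond and 5.7GHz figures from the text; the function name is hypothetical:

```python
def latency_in_cycles(latency_ns: float, clock_ghz: float) -> float:
    """Convert an absolute latency in nanoseconds into CPU clock cycles."""
    # 1 GHz = one cycle per nanosecond, so cycles = nanoseconds * GHz.
    return latency_ns * clock_ghz


# Figures from the text: ~60 ns RAM latency on a 5.7GHz core.
cycles = latency_in_cycles(60, 5.7)
print(f"{cycles:.0f} cycles")  # prints: 342 cycles
```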

Here comes virtual memory to mess everything up

Modern computers use a system called virtual memory. Rather than handing physical memory addresses to processes, the system gives them virtual memory addresses. Each process has its own virtual address space. This has two benefits. Firstly, it cleanly separates the memory that belongs to one process from the memory that belongs to another. This helps to prevent attacks where malicious software reads data from the memory of other software, potentially accessing sensitive information. Secondly, it hides the physical memory layout from the process. This allows the operating system to move rarely used pages of memory out to a paging file on storage while they remain mapped in the process's virtual address space. As a result, the computer can gracefully handle situations where more RAM is required than is physically present. Without virtual memory, some data would simply have to be removed from RAM, likely causing at least one program to crash.
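To make the separation concrete, here is a minimal sketch, assuming 4 KiB pages and a flat dictionary per process in place of a real page table. The process names and frame numbers are hypothetical. The same virtual address resolves to different physical memory in each process, and a process can only reach frames that its own table maps:

```python
PAGE_SIZE = 4096  # assumption: 4 KiB pages

# Hypothetical per-process tables: virtual page number -> physical frame number.
page_tables = {
    "process_a": {0x10: 0x2A},
    "process_b": {0x10: 0x7F},
}


def to_physical(process: str, virtual_address: int) -> int:
    """Translate a virtual address using only the given process's table."""
    table = page_tables[process]
    vpn, offset = divmod(virtual_address, PAGE_SIZE)
    frame = table[vpn]  # KeyError: this process has no mapping for the page
    return frame * PAGE_SIZE + offset


# Same virtual address, two different physical locations.
print(hex(to_physical("process_a", 0x10123)), hex(to_physical("process_b", 0x10123)))
```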

Unfortunately, if you use virtual memory addresses, the computer needs to translate each of them into a physical memory address before it can read the data. This requires a table, called the page table, that stores the translations from virtual addresses to physical addresses. Its size grows with the amount of memory in use, but it is generally fairly small compared with the capacity of system RAM. The catch is that if the page table is stored in RAM, every request to RAM needs at least two requests to RAM: one to find the physical address, and another to actually access that location. With the four-level page tables commonly used on x86-64, a full lookup can take four memory reads before the data itself is fetched.
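The doubling of memory traffic can be sketched with a toy memory that counts its reads. This assumes a single-level page table stored in the same memory as the data, 4 KiB pages, and one value per address; the class, function, and table location are hypothetical:

```python
PAGE_SIZE = 4096  # assumption: 4 KiB pages


class Memory:
    """Toy physical memory: each address holds one value, and every read is counted."""

    def __init__(self):
        self.cells = {}
        self.reads = 0

    def read(self, address):
        self.reads += 1
        return self.cells.get(address, 0)


def load(memory, page_table_base, virtual_address):
    """Read one value through a single-level page table stored in the same memory."""
    vpn, offset = divmod(virtual_address, PAGE_SIZE)
    # Request 1: fetch the page table entry, which itself lives in RAM.
    frame = memory.read(page_table_base + vpn)
    # Request 2: fetch the data at the translated physical address.
    return memory.read(frame * PAGE_SIZE + offset)


memory = Memory()
PAGE_TABLE_BASE = 0x100000                   # hypothetical page table location
memory.cells[PAGE_TABLE_BASE + 0x10] = 0x2A  # virtual page 0x10 -> frame 0x2A
memory.cells[0x2A * PAGE_SIZE + 0x123] = 99  # the data itself

value = load(memory, PAGE_TABLE_BASE, 0x10123)
print(value, memory.reads)  # prints: 99 2
```

A multi-level table would add one read per level to the count.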

Enter the translation lookaside buffer

The solution to this problem is to store the translation table somewhere faster. The CPU cache would fit the bill nicely, at least from a speed perspective. The problem, however, is that the CPU cache is small and already heavily utilised. Not only would the table not fit in the cache, but storing it there would also disrupt the cache's existing job, which already defines much of the CPU's performance.

Of course, if the principle of caching already works for memory access, why not repeat it for the translation table? That is exactly what the Translation Lookaside Buffer, or TLB, is: a high-speed cache for recent address translations. It isn't big enough to store the entire table, but its small size means that it can respond very quickly, within a single clock cycle.

Every memory request goes via the TLB. On a TLB hit, the TLB provides the physical memory address for the actual request, typically adding a single cycle of latency. On a TLB miss, the translation has to be looked up in the page table in main memory. The failed TLB lookup itself costs only a few cycles, a loss far outweighed by the latency of that memory access. Once the address translation is retrieved from system RAM, it is pushed into the TLB and the request is then repeated, this time with an immediate TLB hit.
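The hit, miss, fill, and retry sequence described above can be sketched as follows. This is a toy model, not how hardware is built: it assumes 4 KiB pages, a fully associative TLB with FIFO replacement, and a dictionary standing in for the page table in RAM. The class and function names are hypothetical:

```python
from collections import OrderedDict

PAGE_SIZE = 4096  # assumption: 4 KiB pages


class TLB:
    """Tiny fully associative TLB; FIFO replacement is a simplifying assumption."""

    def __init__(self, capacity=4):
        self.capacity = capacity
        self.entries = OrderedDict()  # virtual page number -> physical frame number
        self.hits = 0
        self.misses = 0

    def lookup(self, vpn):
        frame = self.entries.get(vpn)
        if frame is None:
            self.misses += 1
        else:
            self.hits += 1
        return frame

    def insert(self, vpn, frame):
        if len(self.entries) >= self.capacity:
            self.entries.popitem(last=False)  # evict the oldest entry
        self.entries[vpn] = frame


def translate(tlb, page_table, virtual_address):
    vpn, offset = divmod(virtual_address, PAGE_SIZE)
    frame = tlb.lookup(vpn)
    if frame is None:
        # TLB miss: fetch the translation from the page table in RAM,
        # push it into the TLB, then repeat the request.
        tlb.insert(vpn, page_table[vpn])
        return translate(tlb, page_table, virtual_address)
    return frame * PAGE_SIZE + offset


page_table = {0x10: 0x2A, 0x11: 0x2B}  # hypothetical mappings
tlb = TLB()
first = translate(tlb, page_table, 0x10123)   # miss, fill, then hit on the retry
second = translate(tlb, page_table, 0x10456)  # same page: immediate hit
print(hex(first), hex(second), tlb.hits, tlb.misses)  # prints: 0x2a123 0x2a456 2 1
```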

Note: There are different schemes for deciding which TLB entry to evict when the TLB is full. Some may use a First In, First Out, or FIFO, scheme. Others may use a Least Frequently Used, or LFU, scheme.
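To see how the choice of scheme matters, the sketch below replays the same stream of page accesses through a two-entry toy TLB under each policy. The stream is hypothetical and chosen so that one page is "hot". FIFO evicts the hot page simply because it arrived first, while LFU keeps it. Exact use counts are a simplification; hardware typically relies on much cheaper approximations:

```python
from collections import Counter, deque


def count_misses(accesses, capacity, policy):
    """Replay virtual page numbers through a toy TLB and count the misses."""
    if policy not in ("fifo", "lfu"):
        raise ValueError(f"unknown policy: {policy}")
    cached = set()
    order = deque()   # insertion order, used by FIFO
    uses = Counter()  # access counts, used by LFU (kept after eviction, a simplification)
    misses = 0
    for vpn in accesses:
        uses[vpn] += 1
        if vpn in cached:
            continue
        misses += 1
        if len(cached) >= capacity:
            if policy == "fifo":
                victim = order.popleft()  # oldest entry
            else:
                victim = min(cached, key=lambda page: uses[page])  # least used entry
                order.remove(victim)
            cached.remove(victim)
        cached.add(vpn)
        order.append(vpn)
    return misses


stream = [1, 1, 1, 2, 3, 1]  # page 1 is accessed most often
print(count_misses(stream, 2, "fifo"), count_misses(stream, 2, "lfu"))  # prints: 4 3
```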

In the rarer case that the address translation table has no valid entry for the address, because the requested data is not in RAM, a page fault is raised. The OS must then handle the fault and transfer the data from storage to RAM before the request can continue.
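A minimal sketch of that flow, assuming 4 KiB pages and treating a missing dictionary key as "no valid entry". Real page tables usually keep the entry and clear a present bit instead. The exception, function names, and the dictionary standing in for the paging file are all hypothetical:

```python
PAGE_SIZE = 4096  # assumption: 4 KiB pages


class PageFault(Exception):
    """Raised when a virtual page has no valid entry in the page table."""


def translate(page_table, virtual_address):
    vpn, offset = divmod(virtual_address, PAGE_SIZE)
    if vpn not in page_table:
        raise PageFault(vpn)
    return page_table[vpn] * PAGE_SIZE + offset


def access(page_table, physical, storage, free_frames, virtual_address):
    """Translate an address, letting a toy 'OS' service any page fault first."""
    try:
        return translate(page_table, virtual_address)
    except PageFault as fault:
        vpn = fault.args[0]
        frame = free_frames.pop()           # claim a free physical frame
        physical[frame] = storage.pop(vpn)  # copy the page from storage into RAM
        page_table[vpn] = frame             # install the new translation
        return translate(page_table, virtual_address)  # let the request continue


page_table = {}                     # nothing resident yet
physical = {}                       # frame number -> page contents
storage = {0x10: b"page contents"}  # hypothetical paging file
free_frames = [0x2A]
print(hex(access(page_table, physical, storage, free_frames, 0x10123)))  # prints: 0x2a123
```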

Conclusion

The Translation Lookaside Buffer, or TLB, is a high-speed CPU cache dedicated to caching recent address translations from the page table in system RAM. This is necessary because virtual memory, as implemented in all modern computers, would otherwise require at least two requests to RAM for every request to RAM: one to translate the virtual memory address to a physical memory address, and another to actually access the physical address. By caching recent translations, the TLB greatly reduces memory latency whenever a lookup hits.
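The size of that reduction depends on the hit rate. The sketch below uses the standard textbook effective-access-time model, which is an assumption here: a single-level page table, no data caches, and one TLB lookup per request (the retried lookup after a miss is ignored). The 1-cycle TLB hit and 342-cycle RAM access come from the text; the hit rates are illustrative, not measured:

```python
def effective_access_cycles(hit_rate, tlb_cycles, memory_cycles):
    """Average cycles per memory request for a given TLB hit rate.

    Textbook model (an assumption): single-level page table, no data caches,
    and one TLB lookup per request.
    """
    hit_cost = tlb_cycles + memory_cycles       # TLB supplies the translation
    miss_cost = tlb_cycles + 2 * memory_cycles  # page-table read, then the data read
    return hit_rate * hit_cost + (1 - hit_rate) * miss_cost


# Figures from the text: 1-cycle TLB hit, ~342-cycle RAM access.
for rate in (0.0, 0.9, 0.99):
    print(f"hit rate {rate:.0%}: {effective_access_cycles(rate, 1, 342):.1f} cycles")
```

Even a modest hit rate removes most of the cost of the extra page-table read.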

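Care must be taken to ensure that cached translations are relevant to the currently active process. Because each process has a different virtual address space, one process's translations can't be reused by another, so the TLB must either be flushed on a context switch or have its entries tagged with the address space they belong to. The Meltdown vulnerability is related: it relied on kernel memory being mapped into every process's address space, and its main mitigation, kernel page-table isolation, separates those mappings at the cost of more frequent TLB flushes.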
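Both approaches can be sketched in a few lines. This toy TLB, whose class and method names are hypothetical, keys each entry by an address-space identifier as well as the virtual page number, so a lookup from a different process simply misses. x86 calls such tags PCIDs and Arm calls them ASIDs. The `flush` method models the untagged alternative of discarding everything on a context switch:

```python
class TaggedTLB:
    """Toy TLB whose entries are tagged with an address-space identifier (ASID)."""

    def __init__(self):
        self.entries = {}  # (asid, virtual page number) -> physical frame number

    def insert(self, asid, vpn, frame):
        self.entries[(asid, vpn)] = frame

    def lookup(self, asid, vpn):
        # Only entries tagged with the running process's ASID can hit.
        return self.entries.get((asid, vpn))

    def flush(self):
        # The untagged alternative: discard every entry on a context switch.
        self.entries.clear()


tlb = TaggedTLB()
tlb.insert(asid=1, vpn=0x10, frame=0x2A)
print(tlb.lookup(asid=1, vpn=0x10), tlb.lookup(asid=2, vpn=0x10))  # prints: 42 None
```

Tagging avoids throwing away useful translations on every switch, at the cost of a few extra bits per entry.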