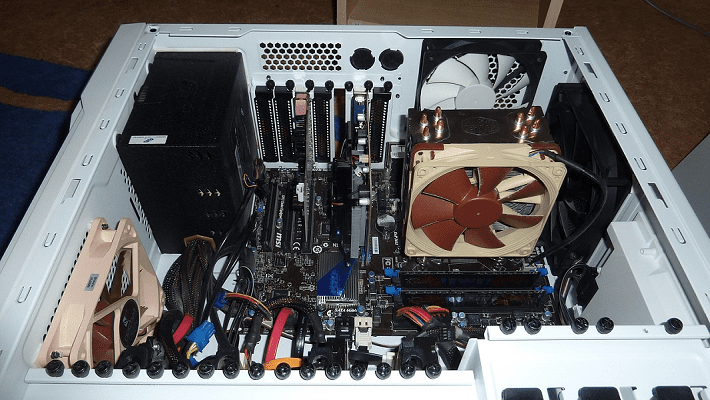

One of the key things to know about computers is that not everything runs at the same speed. This mismatch can lead to some nasty security vulnerabilities, as well as accuracy and stability issues. One of the slowest components in a computer is the HDD. HDDs are slow because they rely on mechanical moving parts: a spinning disk and an actuator arm that carries the read/write head.

Conversely, the CPU is the fastest component of a computer. If the CPU tries to write some data to the HDD as fast as the CPU can output it, the HDD won’t be able to keep up. This would generally result in a significant amount of data loss and complete corruption of the data.

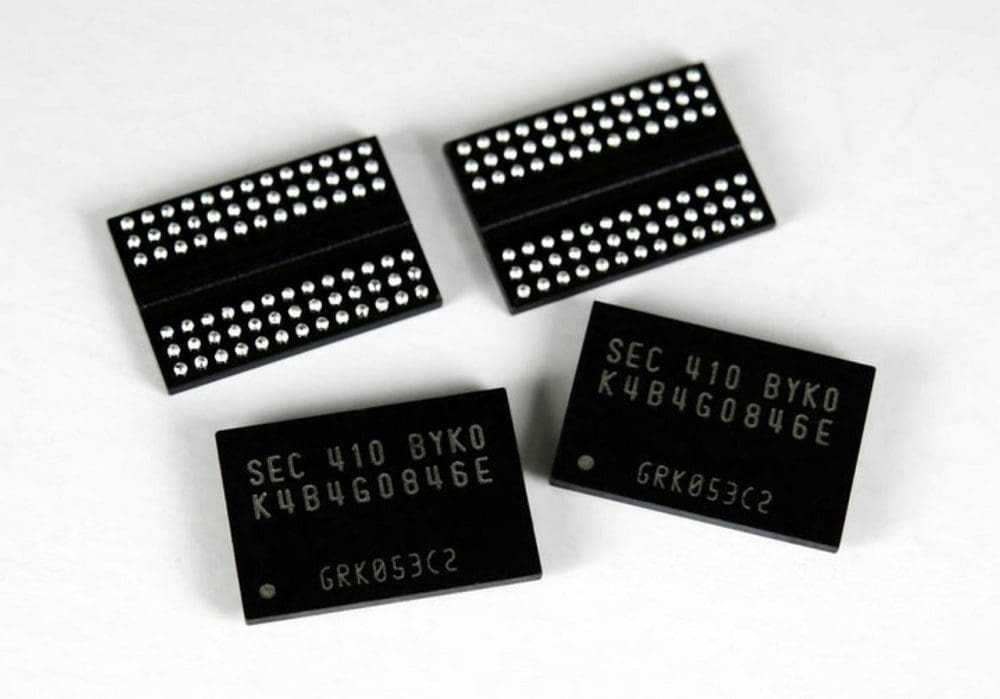

An intermediate memory device is used as a buffer to get around this issue. For example, the CPU can transfer the data to the much faster system RAM as fast as the RAM can take it. RAM is a lot more suitable for temporarily holding the data, as it has far more capacity than the CPU’s own extremely limited on-chip memory (its registers and cache).

The data can then be sent over the SATA bus to the HDD from the system RAM. On the actual HDD, another set of memory acts as a read/write buffer. The data can be stored temporarily until the drive is ready to write it to the spinning disk.

The use of extra memory layers as buffers helps to ensure that data is not lost just because it can’t be written as quickly as it is being transferred to the storage media. Buffers also allow for optimizations of both read and write operations.
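The buffering idea described above can be sketched in a few lines of Python. This is only a minimal illustration, not how a real storage stack is built: the function name `transfer`, the `buffer_size` and `write_delay` parameters, and the use of a thread to stand in for the slow drive are all assumptions made for the example.

```python
import queue
import threading
import time

def transfer(items, buffer_size=4, write_delay=0.001):
    """Move items from a fast producer to a slow writer through a bounded buffer."""
    buffer = queue.Queue(maxsize=buffer_size)  # the intermediate buffer
    written = []                               # stands in for the slow disk
    done = object()                            # sentinel marking the end of the data

    def slow_writer():
        while True:
            item = buffer.get()      # blocks while the buffer is empty
            if item is done:
                break
            time.sleep(write_delay)  # simulate a slow mechanical write
            written.append(item)

    writer = threading.Thread(target=slow_writer)
    writer.start()
    for item in items:
        buffer.put(item)  # blocks while the buffer is full, so nothing is dropped
    buffer.put(done)
    writer.join()
    return written

result = transfer(list(range(20)))
```

The fast side is simply made to wait whenever the buffer is full, so every item arrives, and in the original order, even though the writer is much slower than the producer.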

Optimizations

Let’s consider a scenario where three operations must happen on an HDD. The third operation reads the data that directly follows the data read by the first operation. The second operation targets a separate location and requires the actuator arm to move away to a different part of the disk.

In a purely FIFO (First-In, First-Out) queue, the HDD would waste a bunch of time moving the arm to the location of the second operation only to move it straight back. This isn’t an issue if the operations are issued far enough apart. However, if they are queued up simultaneously, there are some noticeable performance issues: the disk is essentially idle while the arm is seeking, and the third operation is significantly delayed. Without a large enough buffer, performing these operations in order would be necessary.

The operations can be optimized with a large enough buffer to store the relevant data. The third operation can be re-ordered to be performed directly after the first. Because the two now follow each other on the disk, there’s no seek time between them. This applies a minimal delay to the second operation while significantly speeding up the third.

Here the buffer directly increases the performance of the drive by enabling optimizations.
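To put rough numbers on the three-operation scenario, here is a small sketch that compares how far the arm travels in FIFO order versus a re-ordered queue. The track numbers are made up for illustration, and the greedy "nearest request first" rule is just one simple re-ordering strategy chosen for the example; it is not a claim about what any particular drive firmware does.

```python
def total_seek(start, tracks):
    """Total head travel (in tracks) when requests are served in the given order."""
    distance, position = 0, start
    for track in tracks:
        distance += abs(track - position)
        position = track
    return distance

def nearest_first(start, tracks):
    """Re-order queued requests greedily: always serve the closest track next."""
    pending, position, order = list(tracks), start, []
    while pending:
        nxt = min(pending, key=lambda t: abs(t - position))
        pending.remove(nxt)
        order.append(nxt)
        position = nxt
    return order

queued = [100, 900, 101]  # hypothetical tracks for operations 1, 2, and 3

fifo_cost = total_seek(0, queued)          # 100 + 800 + 799 = 1699 tracks
reordered = nearest_first(0, queued)       # [100, 101, 900]
reordered_cost = total_seek(0, reordered)  # 100 + 1 + 799 = 900 tracks
```

With these made-up positions, re-ordering nearly halves the arm travel. The re-ordering is only possible because all three requests are sitting in the buffer at the same time.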

A Graphics Example

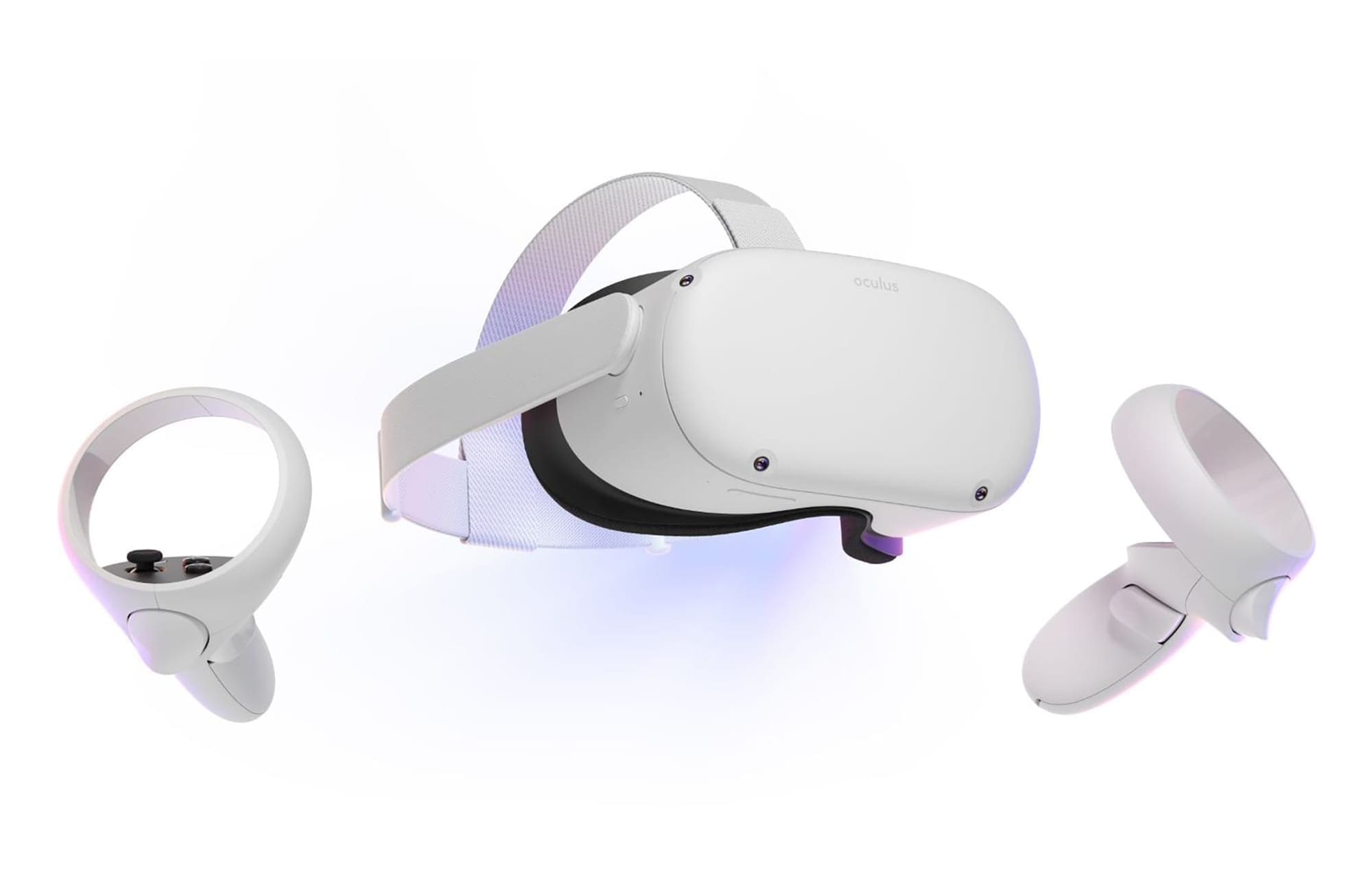

Another excellent example of the usefulness of a buffer is in rendering images on a computer screen. A modern computer screen works by displaying many images every second. Typically, 60 distinct images are shown each second, as this allows for smooth motion perception. These images are written to the screen progressively from top to bottom, though the write time is short.

If you don’t have any graphics buffer, the image can change partway through being written to the screen. This may not be noticed on static content such as your desktop or an unmoving web page. But if there is a significant enough change, as is common in video content and video games, you may notice screen tearing. The tear is simply the boundary between the previous and current image. The effect can be very noticeable, distracting, and unpleasant.

By implementing a frame buffer, the computer can queue up the next frame to be displayed. The screen can just read it when it’s ready. However, a single buffer can lead to the GPU sitting idle, unable to start outputting the next frame because the monitor hasn’t read the last one from the buffer. To get around this, double or even triple buffering is used: one buffer is always kept filled with the next frame to be written to the screen, while the other buffer or buffers are updated with the latest content as fast as possible.
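The double-buffering idea can be sketched with two small grids of pixel values. This is a toy model under stated assumptions: the `DoubleBuffer` class and its `render`, `swap`, and `scan_out` methods are hypothetical names invented for this example, and a real GPU and display handle this in hardware and drivers rather than in Python lists.

```python
class DoubleBuffer:
    """Two frame buffers: the display reads `front` while the renderer fills `back`."""

    def __init__(self, height, width):
        self.front = [[0] * width for _ in range(height)]
        self.back = [[0] * width for _ in range(height)]

    def render(self, value):
        # Draw the next frame row by row, touching only the back buffer.
        for row in self.back:
            for x in range(len(row)):
                row[x] = value

    def swap(self):
        # Exchange the two buffers; only references move, no pixels are copied.
        self.front, self.back = self.back, self.front

    def scan_out(self):
        # What the display would read: a copy of the current front buffer.
        return [row[:] for row in self.front]

buffers = DoubleBuffer(height=2, width=3)
buffers.render(1)                 # frame 1 is drawn off-screen
before_swap = buffers.scan_out()  # the display still sees the old all-zero frame
buffers.swap()
after_swap = buffers.scan_out()   # the display now sees the complete frame 1
```

Because rendering only ever writes to the back buffer, the display never reads a half-drawn frame. It sees either the complete old frame or the complete new one.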

Conclusion

A buffer is a portion of memory, either permanently set aside or temporarily used, that stores data to ensure it is fully processed, read, or written by the intended component. This functionality is especially critical when a device is slower than the transmission medium feeding it, or when there can be a delay.

Buffers generally help to enable significant optimizations of processes, though they can add a minor delay to individual operations. It’s essential to know that data in a buffer typically hasn’t yet been protected from a power loss event. While the time any piece of data spends in a buffer is relatively short, a lot of data goes through the buffer, meaning there is a real risk of data loss.
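For software that can’t afford to lose buffered data, most operating systems offer a way to ask for buffers to be flushed. The sketch below uses Python’s `flush()` and `os.fsync()`; the `durable_write` helper and the scratch file path are hypothetical names made up for the example. Note that this only asks the operating system to push its cached copy out to the device. Whether the drive’s own onboard buffer is also flushed depends on the platform and the hardware.

```python
import os
import tempfile

def durable_write(path, data):
    """Write bytes, then ask the OS to push them out of its buffers before returning."""
    with open(path, "wb") as f:
        f.write(data)         # lands in Python's own user-space buffer first
        f.flush()             # hand the data over to the operating system
        os.fsync(f.fileno())  # ask the OS to commit its cached copy to the device

path = os.path.join(tempfile.mkdtemp(), "example.bin")  # hypothetical scratch file
durable_write(path, b"important data")
```

The trade-off is speed: forcing a flush after every write gives up much of the performance benefit that the buffers were providing in the first place.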